Real-time “everything” with operational and analytics data

In the data world, nothing is as enticing as real-time everything:

- Real-time operational data

- Real-time analytics data

- Real-time data sharing

They’re often talked about, and more importantly, implemented, separately. But, what if they weren’t?

What if the worlds of operational data, analytics, and data sharing were (1) merged and (2) updated real-time with a unified version of the truth?

The art of the possible

Real-time operational data is the ability for data to flow between business applications for seamless business workflows. For example, real-time operational data enables customers to receive shipping confirmations immediately after placing an order.

Real-time analytics is the ability to have timely insights after operational activities happen. But, it’s often narrowly scoped to presenting targeted insights embedded, real-time, in operational processes for a singularly-focused question. These insights are not calculated real-time as a common setup for data warehouses is to sync operational data overnight, perform transformations and deduplication, and then calculate the insights. Because of this, these insights are often one to two days lagging behind “truthful” data. Moreover, incorporating these calculated insights back into the operational process requires building customized data integrations, yet still they can only answer the question at-hand.

For example, real-time analytics enables personalized product recommendations while buyers are viewing products. It may also incorporate summarized customer support history, providing the sales team with visibility to customer health during cross-sell opportunities.

So, what would it mean for these analytics and operational data worlds to merge?

- It means there can be an accurate, trusted, and versioned customer list available in real-time for any scenario where it might be needed.

- It means insights can be timely, not lagging 24-48 hours behind the real story, and insights can be more effectively and widely embedded in operational business workflows.

- It means the many (many) data integration paths and layers to sync data (and re-sync data), match customers, deduplicate, and recalculate core attributes can be stripped away

- It means the dividing walls between operational and analytics data usage — and the delays those walls create — can begin to disappear.

As lofty as this is, it isn’t that far fetched. But, because we’re realists, we’ll start with an appreciation for the complexity in bringing the worlds of operational and analytics together. Then, we’ll get real about what real-time data really is. (Hint: We start with language).

The complexities of operational and analytics data sharing

Analytics and operational data solutions all use data, all store data, and all move data. So, why have these functions been so separate to-date?

We’ll use the people-process-technology triangle as a framework to break down the divergent complexities between operational and analytical data. A picture is worth 1,000 words; a table, maybe 500. Let’s go with that.

TABLE 1: Unpacking the variation between operational data and analytics using the people-process-technology framework

| Operational | Analytics | |

| People |

|

|

| Process |

|

|

| Technology |

|

|

It’s not just the use cases for operational and analytics data that are disparate, it’s also…

- The platforms beneath them

- The data moving tools they leverage

- The type of data they use

- The skills of the people designing and building these systems

- The language the data resides in

What is truly the same across these worlds are the data entities they leverage: For example, the Customer, Product, Person. This data is needed across all the disparate operational and analytical systems. To-date, to solve this, either a spider-web of integrations is built to cross-sync data, or spot-solutions are developed to answer a targeted set of questions.Those approaches leave a disproportionate amount of code to operate and maintain for the value they provide. Over time, those custom approaches take away valuable cycles from development teams on either side of the operational and analytical divide.

Point made: It’s overly complex. But merging these two worlds with real-time data is more attainable than ever before.

To get real with enabling real-time, we’ll zoom into the technology pillar, particularly, the language of writing data: JSON vs SQL.

To merge both worlds, start with speaking the same language

There’s no argument that the way data is stored has been optimized for the necessary access-pattern: Keep operational data in JSON and analytics data in SQL.

But JSON and SQL can now communicate better; they’ve found their love language.

Many data storage solutions can now parse JSON using dot-notation and flatten functions, turning the key-value pairs into distinct rows. This is the immediate translation that transforms JSON into SQL.

However, anyone who has used Google Translate with idioms can attest, more than straight-forward mappings are needed. (Otherwise, we’re left perplexed on why someone might be chewing fat.) To be fluent in both, we must add in a metadata mapping of a JSON schema to an analytics-minded star schema. This creates a dynamic interpretation of key-value pairs into insight-enabling facts and dimensions.

Let’s do a quick example of this fluent translation: with a dimension attribute in JSON, use of a flattener translation, and SQL DDL to create a dimension:

JSON structured object, with the airline attribute coupon.AirlineDesignator as the target for the SQL dimension.

{ "$schema": "https://json-schema.org/draft-07/schema#",

"type": "object",

"properties": {

"Ticket": {

"type": "array",

"items": {

"type": "object",

"properties": {

"passengerNameRecordCode": {

"type": "string",

"description": "A unique passenger record",

"pattern": "[A-Z0-9]{6}"

},

"airlineCode": {

"type": "string",

"description": "The 2-letter code of the airline",

"pattern": "[A-Z]{2}"

},

"coupons": {

"description": "A list of coupons related to each leg of travel",

"type": "array",

"items": {

"description": "Information related to a coupon",

"type": "object",

"properties": {

"AirlineDesignator": {

"description": "The 2-letter code identifying the carrier for each coupon",

"type": "string",

"minLength": 1

}

}

}

}

}

}

}

}

}

JSON to SQL flattener that parses the value intended for the D_AIRLINE dimension and creates the SQL table

CREATE TABLE D_AIRLINE as (

SELECT DISTINCT

C.value:AirlineDesignator::varchar(255) AIRLINE_CODE

FROM ticket_object T,

lateral flatten(outer => true, input => ticket_object:coupons) C

);

That’s it. Once the dimensions and facts are identified as metadata, the language translation can fall into place. The world is your oyster in how analytics-driven insights and timely business operational data can coexist, both unlocking seamless customer experiences and reducing the need to maintain layers of spider-web integration code. Your operational and analytics data worlds can combine, empowering you with the ability to automate and accelerate data workflows across your business networks.

Let’s make it real

A real-time data platform must leverage the best-of-breed solutions behind the operational and analytics workflows while minimizing the stops-and-starts between them. By building on the power of Vendia Share, coupled with the data solutions that clearly used Duolingo for JSON, a real-time data platform is a real-time possibility.

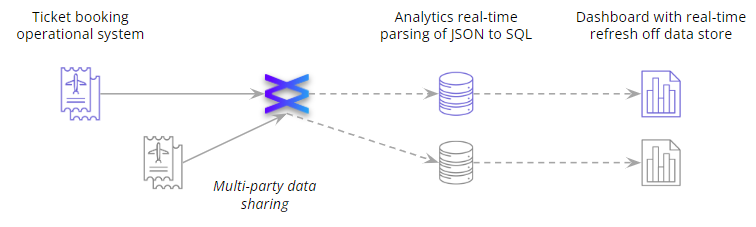

As an example, let’s look at a data sharing scenario where airlines share ticket data across their business network. In addition to operational sharing, they now want real-time analytics off this data — including only the data explicitly shared with them. This real-time platform is created by leveraging Vendia Share, an analytics-focused data warehouse that speaks JSON and query-on-demand dashboard solution. See Figure 1.

FIGURE 1: Vendia Share for real-time operational and analytics data sharing

- With Vendia, data can be shared, with control, across the entire business network.

- With a distinct, isolated, real-time streaming warehouse, the shared data is unlocked for analytics while maintaining the sharing rules already applied upfront.

- With a dashboarding solution that queries directly against data stores, the insights remain current with the latest loaded and transformed data. No more lag time.

Become a real-time convert

Real-time operational and analytics worlds can collide, and with that, unlock a new sense of data fluidity both within company walls and across business alliances.

- Learn more about Vendia: Check out our use cases and try out Vendia Share.

- Reach out to build a real-time proof of concept in 1 week.

Meanwhile, if you have real thoughts on real-time data, we’d love to hear from you in our guest contributor program. And join our community committed to real talk on real-time data sharing with our new podcast, Circles of Trust.